In concurrent programming, Go stands out for its efficiency and elegance, largely thanks to goroutines. Goroutines are distinct from traditional operating system (OS) threads and change the way concurrent tasks are executed in Go programs. This post examines how goroutines work: what distinguishes them from OS threads, the principles behind them, and how they make concurrent Go programs efficient, simple, and scalable.

Go first piqued my interest when I saw how fast Go tools were at subdomain enumeration while I was doing bug bounty hunting. How, I wondered? That’s when I learned about goroutines.

Understanding the Essence of Goroutines:

At its core, a goroutine is a lightweight thread of execution managed by the Go runtime rather than by the operating system. To appreciate why that matters, it helps to look at the characteristics that set goroutines apart from conventional OS threads and explain their performance.

- User-Mode Scheduling: Elevating Efficiency to New Heights

The defining feature of goroutines is user-mode scheduling. Instead of relying on the OS kernel’s scheduler, the Go runtime schedules goroutines itself, so switching from one goroutine to another does not require entering and exiting kernel mode. When a goroutine blocks, for example while waiting on a network read or a channel operation, the runtime parks it and switches to another runnable goroutine. Because these switches are cheaper than kernel thread context switches, goroutines carry less overhead than traditional threading models and stay responsive.
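A minimal sketch of this behavior: the hypothetical function `blockedReceiverDemo` limits the program to one OS thread running Go code with `runtime.GOMAXPROCS(1)`, starts a goroutine that blocks on a channel receive, and lets the main goroutine keep working. Since only one thread runs Go code, any progress the main goroutine makes while the receiver waits comes from the runtime switching goroutines in user space, not from a second thread.

```go
package main

import (
	"fmt"
	"runtime"
)

// blockedReceiverDemo starts a goroutine that blocks on a channel receive and
// records that the main goroutine keeps running in the meantime.
func blockedReceiverDemo() []string {
	prev := runtime.GOMAXPROCS(1)  // allow only one OS thread to run Go code
	defer runtime.GOMAXPROCS(prev) // restore the previous setting on return

	var log []string
	ch := make(chan string)
	done := make(chan struct{})

	go func() {
		msg := <-ch // parks this goroutine until a value arrives
		log = append(log, "receiver got: "+msg)
		close(done)
	}()

	// The main goroutine keeps working while the receiver is blocked.
	for i := 1; i <= 3; i++ {
		log = append(log, fmt.Sprintf("main step %d", i))
	}
	ch <- "hello" // unbuffered send: wakes the receiver
	<-done        // wait until the receiver has recorded its message
	return log
}

func main() {
	for _, line := range blockedReceiverDemo() {
		fmt.Println(line)
	}
}
```

The order of the log is deterministic: the main goroutine appends all of its steps before the unbuffered send that wakes the receiver, and it reads the log only after `done` is closed, so there is no data race.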

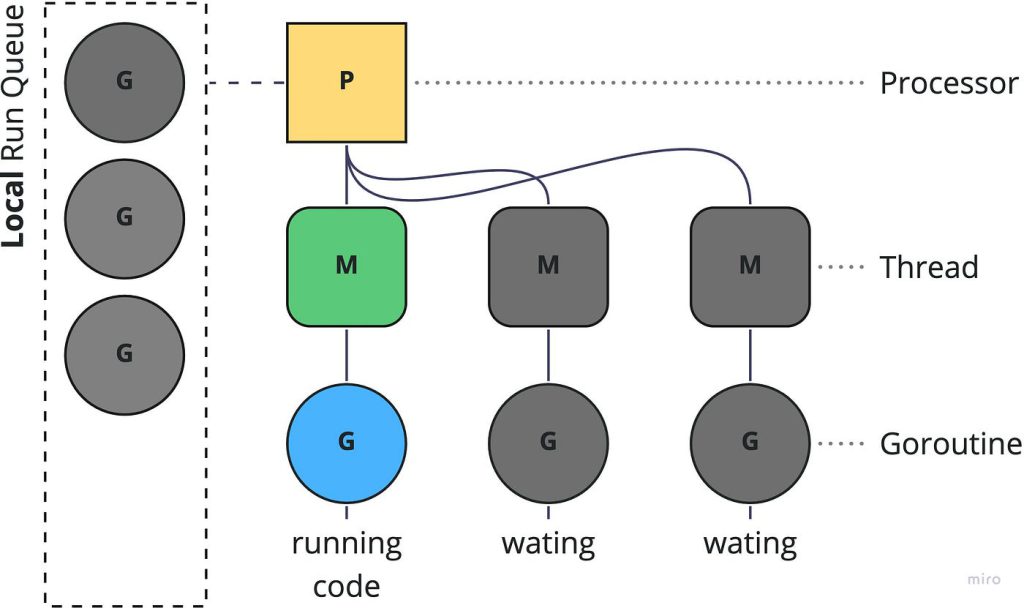

- Multiplexing onto OS Threads: Harnessing the Power of Concurrency

Goroutines are multiplexed onto a smaller pool of OS threads. To use multiple cores, the Go runtime starts several OS threads and distributes goroutines across them. The `GOMAXPROCS` setting, which by default is derived from the number of available CPUs, caps how many threads can execute Go code at the same time. Many goroutines can therefore run in parallel on a handful of threads, which is what lets Go programs scale with the available hardware.
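The runtime exposes the numbers behind this multiplexing. In the sketch below, the hypothetical helper `schedulerSnapshot` parks a large number of goroutines on a channel and then queries `runtime.NumGoroutine`, `runtime.GOMAXPROCS(0)` (passing 0 reads the setting without changing it), and `runtime.NumCPU`. The exact values depend on your machine; the point is that the goroutine count far exceeds the number of threads allowed to run Go code at once.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// schedulerSnapshot starts n goroutines that all park on a shared channel,
// then reports the live goroutine count, GOMAXPROCS, and the CPU count.
func schedulerSnapshot(n int) (goroutines, maxProcs, cpus int) {
	release := make(chan struct{})
	var started, finished sync.WaitGroup
	started.Add(n)
	finished.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer finished.Done()
			started.Done()
			<-release // park until main closes the channel
		}()
	}
	started.Wait()

	goroutines = runtime.NumGoroutine() // includes main and any other goroutines
	maxProcs = runtime.GOMAXPROCS(0)    // 0 queries the value without changing it
	cpus = runtime.NumCPU()

	close(release) // unpark everyone
	finished.Wait()
	return goroutines, maxProcs, cpus
}

func main() {
	g, p, c := schedulerSnapshot(10000)
	fmt.Printf("%d goroutines, at most %d threads running Go code, %d CPUs\n", g, p, c)
}
```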

- Low-Cost Startup: Efficiency Redefined

Goroutines are cheap to start. Traditional threads usually reserve a large, fixed-size stack up front (often a megabyte or more), whereas a goroutine’s stack starts small, typically a few kilobytes, and grows as needed. This design lets developers spawn tens or hundreds of thousands of goroutines without excessive memory overhead. And because goroutine stacks grow on demand, the savings hold whether or not the OS overcommits virtual memory.
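To get a feel for how cheap goroutines are, this sketch (assuming Go 1.19 or later for `atomic.Int64`) starts 100,000 goroutines, each incrementing a shared atomic counter once, and waits for all of them with a `sync.WaitGroup`. `spawnMany` is an illustrative name. Creating the same number of OS threads would typically need far more memory, though exact figures vary by platform.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// spawnMany launches n goroutines, each incrementing a shared counter once,
// and returns the final count after all of them have finished.
func spawnMany(n int) int64 {
	var wg sync.WaitGroup
	var counter atomic.Int64
	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer wg.Done()
			counter.Add(1) // atomic, so concurrent increments are safe
		}()
	}
	wg.Wait()
	return counter.Load()
}

func main() {
	fmt.Println(spawnMany(100_000)) // 100000
}
```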

- Integration with the Language: A Symphony of Cohesion

Goroutines are deeply integrated into the Go language itself. Beyond the `go` statement, which starts a new goroutine, Go provides channel types, send and receive operations, and the `select` statement for coordinating communication between goroutines. Because these are language constructs rather than library add-ons, common concurrent patterns can be expressed directly and concisely. This tight integration is a large part of why Go is so popular for concurrent programming.

- Limitations Compared to OS Threading: Navigating the Terrain

While goroutines offer many advantages, they also have limitations compared to traditional OS threads. Go’s scheduler makes no fairness guarantees, and historically its preemption was limited: before Go 1.14, a goroutine could only be switched out at certain points such as function calls, so a tight loop with no function calls (for example an empty `for {}`) could monopolize its thread and starve other goroutines. Go 1.14 introduced asynchronous preemption, which removes most of these cases on supported platforms. Understanding these limits helps developers make informed decisions when designing concurrent systems around goroutines.
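The preemption behavior can be observed directly. This sketch assumes Go 1.19 or later (for `atomic.Bool`) and a platform where asynchronous preemption, added in Go 1.14, is available and not disabled via `GODEBUG=asyncpreemptoff=1`. The hypothetical `preemptionDemo` restricts Go to one thread, starts a goroutine spinning in a loop with no function calls, and then sleeps. If the runtime could not interrupt the spinner, the sleeping main goroutine would never get the thread back; with asynchronous preemption it does, after a short delay.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
	"time"
)

// preemptionDemo returns true once the main goroutine regains the only thread
// from a busy-looping goroutine. Without asynchronous preemption it may hang.
func preemptionDemo() bool {
	prev := runtime.GOMAXPROCS(1)  // a single thread runs Go code
	defer runtime.GOMAXPROCS(prev) // restore the previous setting on return

	var stop atomic.Bool
	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		for !stop.Load() {
			// busy loop: no blocking, no channel operations, no explicit yield
		}
	}()

	time.Sleep(20 * time.Millisecond) // main parks; the spinner takes the thread
	// Reaching this line means the runtime preempted the spinner.
	stop.Store(true)
	wg.Wait()
	return true
}

func main() {
	fmt.Println("main goroutine resumed:", preemptionDemo())
}
```

Note that this demonstrates preemption, not fairness: the scheduler still makes no promise about how evenly goroutines share CPU time.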

Connecting Goroutines with Related Terms: Bridging Concepts

Although goroutines are specific to Go, they share ideas with several other concepts in concurrent programming. Looking at these connections puts goroutines in a broader perspective and shows where they fit.

- Fibers: Weaving Threads of Similarity

The term “fibers” refers to user-mode-scheduled threads, which closely matches how goroutines are scheduled. Like goroutines, fibers are lightweight threads of execution managed with minimal involvement from the OS kernel.

- Green Threads: Threads Managed by a Runtime

Like fibers, “green threads” are threads scheduled in user mode by a runtime or virtual machine rather than by the OS kernel. The name has nothing to do with environmental friendliness: it comes from the “Green Team” at Sun Microsystems, which designed the original threading library for Java. What green threads share with goroutines is their lightweight, resource-efficient nature.

- Coroutines: Coordinated Dance of Execution

Coroutines can suspend and later resume execution, yielding control at explicit points in their code, and goroutines share this idea of execution that can be paused and resumed. The difference is who decides when to switch: a coroutine yields cooperatively wherever the programmer says so, while a goroutine is suspended by the Go runtime, typically when it blocks on I/O or a channel operation and, since Go 1.14, preemptively as well.
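Channels can emulate a coroutine-style generator, which makes the comparison concrete. In this sketch, the illustrative `fibonacci` function starts a producer goroutine that “yields” each value with an unbuffered send; the producer then waits until the consumer receives that value before computing the next one. Unlike a true coroutine, the producer is a separately scheduled goroutine, so the runtime, not the consumer, decides when it actually runs.

```go
package main

import "fmt"

// fibonacci returns a channel that yields the first n Fibonacci numbers.
func fibonacci(n int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch) // ends the consumer's range loop
		a, b := 0, 1
		for i := 0; i < n; i++ {
			ch <- a // "yield": blocks until the consumer receives a
			a, b = b, a+b
		}
	}()
	return ch
}

func main() {
	for v := range fibonacci(8) {
		fmt.Print(v, " ")
	}
	fmt.Println() // 0 1 1 2 3 5 8 13
}
```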

- Event-Driven Architectures: A Symphony of Asynchronicity

Goroutines fit naturally into event-driven architectures, where work moves on to other tasks while waiting for asynchronous events such as network I/O. Under the hood, the Go runtime uses an event-driven network poller, but it presents that to the programmer as ordinary blocking code: a goroutine waiting on a socket is simply parked until data arrives, while other goroutines keep running. This delivers the efficiency of event-driven systems without forcing callback-style code.

- M:N Hybrid Threading: Bridging the Kernel-User Abyss

M:N hybrid threading maps M user-mode threads onto N kernel threads, which is the same model behind goroutine multiplexing. Just as user-mode threads in an M:N system can migrate between OS threads, the Go runtime moves goroutines across its OS threads to maximize parallelism and make good use of system resources.

Conclusion: Goroutines as the Architects of Concurrency

In short, goroutines are more than a feature: they are the foundation of concurrency in the Go programming language. Their lightweight design, user-mode scheduling, and tight integration with the language make concurrent programs simpler, more efficient, and more scalable. To get the most out of them, developers should understand the principles behind goroutines, including their limitations. As Go continues to evolve, goroutines remain central to its commitment to concurrency, efficiency, and developer-friendly design.
